llama-cpp-python : Install (2024/02/16)

Install [llama-cpp-python], the Python bindings for [llama.cpp], which is an interface to Meta's Llama (Large Language Model Meta AI) models.

| [1] | |

| [2] | Install the other required packages. |

[root@dlp ~]# dnf -y install python3-pip python3-devel gcc gcc-c++ make jq

| [3] | Log in as any regular user and prepare a Python virtual environment for installing [llama-cpp-python]. |

[cent@dlp ~]$ python3 -m venv --system-site-packages ~/llama

[cent@dlp ~]$ source ~/llama/bin/activate

(llama) [cent@dlp ~]$
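To confirm that the shell is really using the virtual environment before installing anything, a small check like the following can help. It is only a sketch: the function name `in_virtualenv` is made up for this example, and it relies on the standard behaviour that `sys.prefix` differs from `sys.base_prefix` inside a venv.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter runs inside a venv.

    Inside a venv, sys.prefix points at the environment directory,
    while sys.base_prefix still points at the system installation.
    """
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
    print("sys.prefix:", sys.prefix)
```

Run it with the `python3` from the activated environment; `sys.prefix` should then point at `~/llama`.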

| [4] | Install [llama-cpp-python]. |

(llama) [cent@dlp ~]$ pip3 install llama-cpp-python[server]

Collecting llama-cpp-python[server]

Downloading llama_cpp_python-0.2.44.tar.gz (36.6 MB)

Installing build dependencies ... done

Getting requirements to build wheel ... done

Installing backend dependencies ... done

Preparing wheel metadata ... done

.....

.....

Successfully installed MarkupSafe-2.1.5 annotated-types-0.6.0 anyio-4.2.0 click-8.1.7 diskcache-5.6.3 exceptiongroup-1.2.0 fastapi-0.109.2 h11-0.14.0 jinja2-3.1.3 llama-cpp-python-0.2.44 numpy-1.26.4 pydantic-2.6.1 pydantic-core-2.16.2 pydantic-settings-2.1.0 python-dotenv-1.0.1 sniffio-1.3.0 sse-starlette-2.0.0 starlette-0.36.3 starlette-context-0.3.6 typing-extensions-4.9.0 uvicorn-0.27.1
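After pip finishes, the installed versions can also be checked from Python. The sketch below uses only the standard `importlib.metadata` module; the helper name `installed_version` is hypothetical, and the package names are taken from the pip output above.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    # The [server] extra pulls in fastapi and uvicorn alongside the bindings.
    for name in ("llama-cpp-python", "fastapi", "uvicorn"):
        print(name, installed_version(name) or "not installed")
```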

| [5] | Download a GGUF-format model that [llama.cpp] can use, then start [llama-cpp-python]. ⇒ https://huggingface.co/TheBloke/Llama-2-13B-chat-GGUF/tree/main ⇒ https://huggingface.co/TheBloke/Llama-2-70B-Chat-GGUF/tree/main |

(llama) [cent@dlp ~]$ wget https://huggingface.co/TheBloke/Llama-2-13B-chat-GGUF/resolve/main/llama-2-13b-chat.Q4_K_M.gguf

(llama) [cent@dlp ~]$ python3 -m llama_cpp.server --model ./llama-2-13b-chat.Q4_K_M.gguf --host 0.0.0.0 --port 8000 &

(llama) [cent@dlp ~]$ llama_model_loader: loaded meta data with 19 key-value pairs and 363 tensors from ./llama-2-13b-chat.Q4_K_M.gguf (version GGUF V2)

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = llama

llama_model_loader: - kv 1: general.name str = LLaMA v2

llama_model_loader: - kv 2: llama.context_length u32 = 4096

llama_model_loader: - kv 3: llama.embedding_length u32 = 5120

llama_model_loader: - kv 4: llama.block_count u32 = 40

llama_model_loader: - kv 5: llama.feed_forward_length u32 = 13824

llama_model_loader: - kv 6: llama.rope.dimension_count u32 = 128

llama_model_loader: - kv 7: llama.attention.head_count u32 = 40

llama_model_loader: - kv 8: llama.attention.head_count_kv u32 = 40

llama_model_loader: - kv 9: llama.attention.layer_norm_rms_epsilon f32 = 0.000010

llama_model_loader: - kv 10: general.file_type u32 = 15

llama_model_loader: - kv 11: tokenizer.ggml.model str = llama

llama_model_loader: - kv 12: tokenizer.ggml.tokens arr[str,32000] = ["<unk>", "<s>", "</s>", "<0x00>", "<...

llama_model_loader: - kv 13: tokenizer.ggml.scores arr[f32,32000] = [0.000000, 0.000000, 0.000000, 0.0000...

llama_model_loader: - kv 14: tokenizer.ggml.token_type arr[i32,32000] = [2, 3, 3, 6, 6, 6, 6, 6, 6, 6, 6, 6, ...

llama_model_loader: - kv 15: tokenizer.ggml.bos_token_id u32 = 1

llama_model_loader: - kv 16: tokenizer.ggml.eos_token_id u32 = 2

llama_model_loader: - kv 17: tokenizer.ggml.unknown_token_id u32 = 0

llama_model_loader: - kv 18: general.quantization_version u32 = 2

llama_model_loader: - type f32: 81 tensors

llama_model_loader: - type q4_K: 241 tensors

llama_model_loader: - type q6_K: 41 tensors

llm_load_vocab: special tokens definition check successful ( 259/32000 ).

llm_load_print_meta: format = GGUF V2

llm_load_print_meta: arch = llama

llm_load_print_meta: vocab type = SPM

llm_load_print_meta: n_vocab = 32000

llm_load_print_meta: n_merges = 0

llm_load_print_meta: n_ctx_train = 4096

llm_load_print_meta: n_embd = 5120

llm_load_print_meta: n_head = 40

llm_load_print_meta: n_head_kv = 40

llm_load_print_meta: n_layer = 40

llm_load_print_meta: n_rot = 128

llm_load_print_meta: n_embd_head_k = 128

llm_load_print_meta: n_embd_head_v = 128

llm_load_print_meta: n_gqa = 1

llm_load_print_meta: n_embd_k_gqa = 5120

llm_load_print_meta: n_embd_v_gqa = 5120

llm_load_print_meta: f_norm_eps = 0.0e+00

llm_load_print_meta: f_norm_rms_eps = 1.0e-05

llm_load_print_meta: f_clamp_kqv = 0.0e+00

llm_load_print_meta: f_max_alibi_bias = 0.0e+00

llm_load_print_meta: n_ff = 13824

llm_load_print_meta: n_expert = 0

llm_load_print_meta: n_expert_used = 0

llm_load_print_meta: rope scaling = linear

llm_load_print_meta: freq_base_train = 10000.0

llm_load_print_meta: freq_scale_train = 1

llm_load_print_meta: n_yarn_orig_ctx = 4096

llm_load_print_meta: rope_finetuned = unknown

llm_load_print_meta: model type = 13B

llm_load_print_meta: model ftype = Q4_K - Medium

llm_load_print_meta: model params = 13.02 B

llm_load_print_meta: model size = 7.33 GiB (4.83 BPW)

llm_load_print_meta: general.name = LLaMA v2

llm_load_print_meta: BOS token = 1 '<s>'

llm_load_print_meta: EOS token = 2 '</s>'

llm_load_print_meta: UNK token = 0 '<unk>'

llm_load_print_meta: LF token = 13 '<0x0A>'

llm_load_tensors: ggml ctx size = 0.14 MiB

llm_load_tensors: CPU buffer size = 7500.85 MiB

warning: failed to mlock 92905472-byte buffer (after previously locking 0 bytes): Cannot allocate memory

Try increasing RLIMIT_MEMLOCK ('ulimit -l' as root).

....................................................................................................

llama_new_context_with_model: n_ctx = 2048

llama_new_context_with_model: freq_base = 10000.0

llama_new_context_with_model: freq_scale = 1

llama_kv_cache_init: CPU KV buffer size = 1600.00 MiB

llama_new_context_with_model: KV self size = 1600.00 MiB, K (f16): 800.00 MiB, V (f16): 800.00 MiB

llama_new_context_with_model: CPU input buffer size = 15.01 MiB

llama_new_context_with_model: CPU compute buffer size = 200.00 MiB

llama_new_context_with_model: graph splits (measure): 1

AVX = 1 | AVX_VNNI = 0 | AVX2 = 1 | AVX512 = 0 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 0 | SSE3 = 1 | SSSE3 = 1 | VSX = 0 | MATMUL_INT8 = 0 |

Model metadata: {'tokenizer.ggml.unknown_token_id': '0', 'tokenizer.ggml.eos_token_id': '2', 'general.architecture': 'llama', 'llama.context_length': '4096', 'general.name': 'LLaMA v2', 'llama.embedding_length': '5120', 'llama.feed_forward_length': '13824', 'llama.attention.layer_norm_rms_epsilon': '0.000010', 'llama.rope.dimension_count': '128', 'llama.attention.head_count': '40', 'tokenizer.ggml.bos_token_id': '1', 'llama.block_count': '40', 'llama.attention.head_count_kv': '40', 'general.quantization_version': '2', 'tokenizer.ggml.model': 'llama', 'general.file_type': '15'}

INFO: Started server process [28738]

INFO: Waiting for application startup.

INFO: Application startup complete.

INFO: Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit)
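The `KV self size = 1600.00 MiB` figure in the log can be reproduced from the other values printed there. The following is a back-of-the-envelope sketch, not llama.cpp's own code: it assumes the cache stores one K and one V tensor per layer, each holding `n_ctx * n_embd_k_gqa` values at 2 bytes (f16). The function name `kv_cache_mib` is made up for this example.

```python
def kv_cache_mib(n_layer: int, n_ctx: int, n_embd_kv: int, bytes_per_value: int = 2) -> float:
    """Estimate the combined size of the K and V caches in MiB."""
    # One cache (K or V): layers x context positions x KV embedding width x bytes per value
    per_cache_bytes = n_layer * n_ctx * n_embd_kv * bytes_per_value
    # K and V caches have the same shape here, so double it
    return 2 * per_cache_bytes / (1024 * 1024)


if __name__ == "__main__":
    # Values from the log: n_layer = 40, n_ctx = 2048, n_embd_k_gqa = 5120, f16 cache
    print(f"{kv_cache_mib(n_layer=40, n_ctx=2048, n_embd_kv=5120):.2f} MiB")
```

With the values from this log, the estimate comes to 800 MiB each for K and V, which matches the reported total of 1600 MiB. Under the same assumption, the cache grows linearly with the context size.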


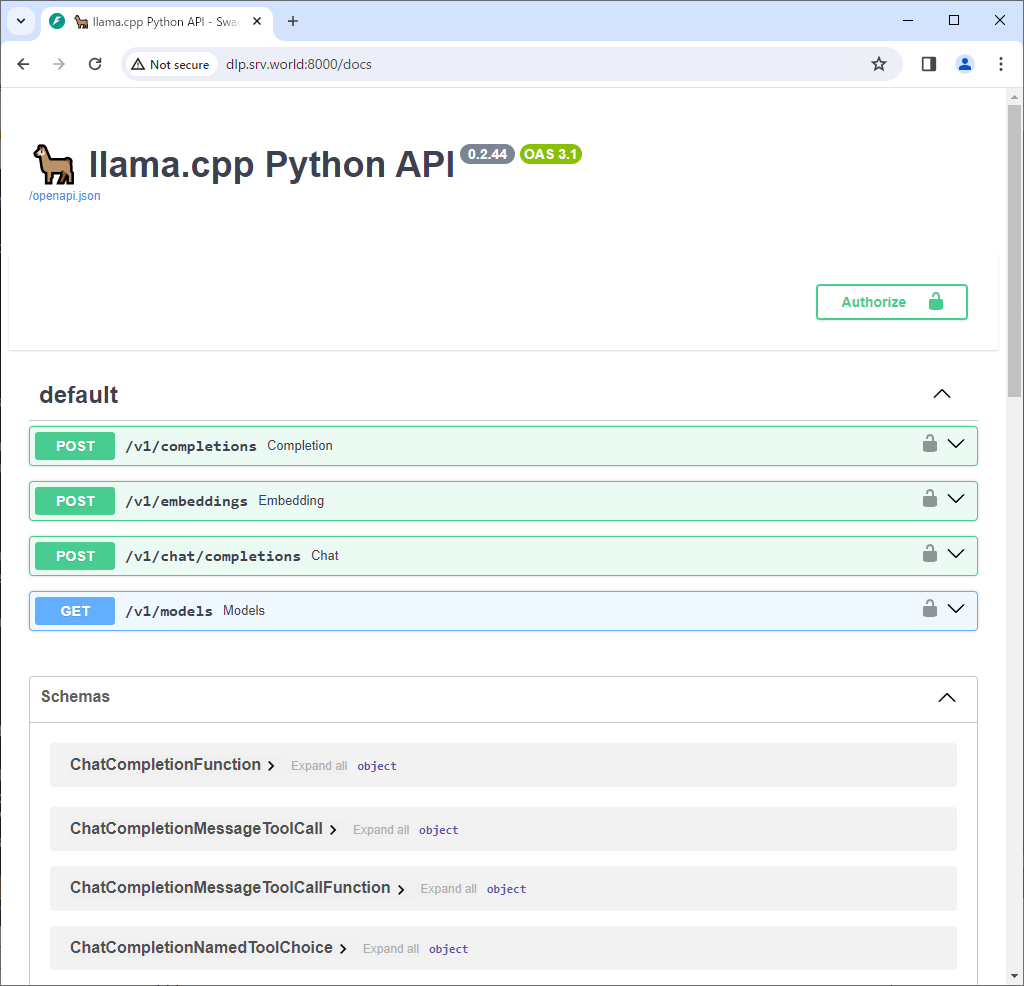

| [6] | From any computer on the local network, open [http://(server hostname or IP address):8000/docs] to view the API documentation. |
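Before opening a browser, it can be useful to check from a client machine that the documentation page is reachable. The sketch below uses only the standard library; `docs_url` and `http_status` are hypothetical helper names, and `localhost` is a placeholder for the server's hostname or IP address.

```python
import urllib.error
import urllib.request
from typing import Optional


def docs_url(host: str, port: int = 8000) -> str:
    """Build the URL of the interactive API documentation page."""
    return f"http://{host}:{port}/docs"


def http_status(url: str, timeout: float = 5.0) -> Optional[int]:
    """Return the HTTP status code, or None if the server cannot be reached."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status (4xx or 5xx)
        return err.code
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, name resolution failure, and so on
        return None


if __name__ == "__main__":
    url = docs_url("localhost")  # placeholder: use the server's hostname or IP address
    print(url, "->", http_status(url))
```

A result of `None` from another machine, while the same check works on the server itself, usually points to a firewall rule or to the server listening only on localhost.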

| [7] | Submit a simple question to verify that it works. Response time and content vary with the question and the model used; because inference runs on the CPU only, expect each response to take a while. For reference, this example runs on a machine with 8 vCPUs and 16 GB of memory. |

# Ask the model to introduce itself

(llama) [cent@dlp ~]$ curl -s -XPOST -H 'Content-Type: application/json' localhost:8000/v1/chat/completions \
-d '{"messages": [{"role": "user", "content": "Introduce yourself."}]}' | jq

llama_print_timings: load time = 3522.87 ms

llama_print_timings: sample time = 49.41 ms / 80 runs ( 0.62 ms per token, 1619.01 tokens per second)

llama_print_timings: prompt eval time = 3522.79 ms / 16 tokens ( 220.17 ms per token, 4.54 tokens per second)

llama_print_timings: eval time = 32576.50 ms / 79 runs ( 412.36 ms per token, 2.43 tokens per second)

llama_print_timings: total time = 36412.87 ms / 95 tokens

INFO: 127.0.0.1:58630 - "POST /v1/chat/completions HTTP/1.1" 200 OK

{

"id": "chatcmpl-9bca7cf4-85f3-410b-b62d-510557855ddd",

"object": "chat.completion",

"created": 1708316132,

"model": "./llama-2-13b-chat.Q4_K_M.gguf",

"choices": [

{

"index": 0,

"message": {

"content": " Hello! My name is LLaMA, I'm a large language model trained by a team of researcher at Meta AI. My capabilities include conversational dialogue, natural language understanding, and the ability to generate human-like text. I can answer questions, provide information, and engage in discussion on a wide range of topics. What can I help you with today?",

"role": "assistant"

},

"finish_reason": "stop"

}

],

"usage": {

"prompt_tokens": 16,

"completion_tokens": 79,

"total_tokens": 95

}

}
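The same request can be sent from Python instead of curl. This is a minimal sketch using only the standard library: the helper names `build_chat_request`, `extract_reply`, and `ask` are made up for this example, and the endpoint path and JSON layout are taken from the curl command and the response shown above.

```python
import json
import urllib.request


def build_chat_request(prompt: str, base_url: str = "http://localhost:8000") -> urllib.request.Request:
    """Build a POST request equivalent to the curl command above."""
    body = json.dumps({"messages": [{"role": "user", "content": prompt}]})
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of a chat.completion response."""
    return response["choices"][0]["message"]["content"].strip()


def ask(prompt: str, timeout: float = 600.0) -> str:
    """Send the prompt to the local server and return the reply text."""
    # CPU-only inference is slow, so the default timeout is generous.
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as resp:
        return extract_reply(json.load(resp))
```

With the server from [5] running, `print(ask("Introduce yourself."))` should print only the reply text rather than the full JSON document.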

# Ask about Hiroshima

(llama) [cent@dlp ~]$ curl -s -XPOST -H 'Content-Type: application/json' localhost:8000/v1/chat/completions \
-d '{"messages": [{"role": "user", "content": "Tell me about Hiroshima city, Japan."}]}' | jq | sed -e 's/\\n/\n/g'

llama_print_timings: load time = 3522.87 ms

llama_print_timings: sample time = 391.82 ms / 673 runs ( 0.58 ms per token, 1717.63 tokens per second)

llama_print_timings: prompt eval time = 3724.29 ms / 17 tokens ( 219.08 ms per token, 4.56 tokens per second)

llama_print_timings: eval time = 268500.27 ms / 672 runs ( 399.55 ms per token, 2.50 tokens per second)

llama_print_timings: total time = 275071.53 ms / 689 tokens

INFO: 127.0.0.1:42492 - "POST /v1/chat/completions HTTP/1.1" 200 OK

{

"id": "chatcmpl-53cbbe90-e8d1-4929-a171-8903bf6a5c5f",

"object": "chat.completion",

"created": 1708316206,

"model": "./llama-2-13b-chat.Q4_K_M.gguf",

"choices": [

{

"index": 0,

"message": {

"content": " Hiroshima is the capital of Hiroshima Prefecture in western Japan. The city was founded in 1589 on the ruins of a smaller castle town of the same name that had been established in 1501 by powerful warlord Mori Motonari. In 1647, Asano Nagatomo, one of the four daimyos (feudal lords) of the time, began construction of Hiroshima Castle to consolidate his power and to protect his territory from the powerful daimyos of neighboring regions.

Hiroshima has been destroyed many times by various calamities: floods, fires, and battles; but it always managed to rebuild itself. In 1871, the city became the prefectural capital and was renamed again to its present name. In 1945, however, the city suffered a fate worse than any previous disaster - an atomic bomb attack that destroyed almost all of its buildings and infrastructure and claimed over 140,000 lives. Today it has been rebuilt with modern architecture and technologies but still bears visible scars from its past disasters as well as remnants from when it was rebuilt after World War II ended in 1945.

Hiroshima has been rebuilt with modern architecture and technologies but still bears visible scars from its past disasters as well as remnants from when it was rebuilt after World War II ended in 1945. Today it has become one of Japan's most popular tourist destinations due to its rich history and culture, including the famous Atomic Bomb Dome - now a UNESCO World Heritage Site - which stands testament to the devastating power of nuclear weapons and serves as a reminder for world peace.

The city's history is marked by various disasters including floods and wars but it has always managed to rebuild itself stronger than before; today it stands not only as an example of resilience but also as an iconic symbol of hope and peace for generations to come.

Hiroshima is known for its many attractions including the Atomic Bomb Dome, Miyajima Island, Hiroshima Peace Memorial Park and Museum, and Okonomiyaki, a popular local dish made from batter and various fillings such as vegetables or meat. Visitors can also take part in various festivals and events that are held throughout the year such as the Hiroshima Festivals or Obon Festivals which celebrate traditional Japanese culture with music dance and food.

In conclusion, Hiroshima is more than just a place; it's an experience that combines history culture and modern technology to create something unique and memorable for all visitors who come to explore this remarkable city. From its humble beginnings as a small village on the banks of the Ota River to its current status as one of Japan's most popular tourist destinations - Hiroshima continues to inspire hope for generations yet to come.",

"role": "assistant"

},

"finish_reason": "stop"

}

],

"usage": {

"prompt_tokens": 23,

"completion_tokens": 672,

"total_tokens": 695

}

}
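The `llama_print_timings` lines show where the time goes. A short script can recompute the throughput from the raw millisecond and token counts. This is only a sketch: the regular expression is written against the log lines shown above, other llama.cpp versions may format them differently, and `tokens_per_second` is a made-up helper name.

```python
import re
from typing import Optional

# Matches e.g. "llama_print_timings: eval time = 268500.27 ms / 672 runs ( ... )"
TIMING = re.compile(
    r"llama_print_timings:\s+(?P<name>[a-z ]+?)\s+time\s*=\s*(?P<ms>[\d.]+)\s+ms"
    r"(?:\s*/\s*(?P<count>\d+)\s+(?:runs|tokens))?"
)


def tokens_per_second(line: str) -> Optional[float]:
    """Return tokens (or runs) per second for a timing line, or None if it has no count."""
    match = TIMING.search(line)
    if match is None or match.group("count") is None:
        return None
    seconds = float(match.group("ms")) / 1000.0
    return int(match.group("count")) / seconds


if __name__ == "__main__":
    lines = [
        "llama_print_timings: load time = 3522.87 ms",
        "llama_print_timings: prompt eval time = 3724.29 ms / 17 tokens ( 219.08 ms per token, 4.56 tokens per second)",
        "llama_print_timings: eval time = 268500.27 ms / 672 runs ( 399.55 ms per token, 2.50 tokens per second)",
    ]
    for line in lines:
        print(tokens_per_second(line))
```

For the Hiroshima request, generation ran at roughly 2.5 tokens per second, so the 672-token answer accounts for nearly all of the 275 seconds reported as total time.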

| [8] | Several Japanese-language models are also available. ⇒ https://huggingface.co/mmnga/ELYZA-japanese-Llama-2-13b-fast-instruct-gguf |

(llama) [cent@dlp ~]$ curl -LO https://huggingface.co/mmnga/ELYZA-japanese-Llama-2-7b-fast-instruct-gguf/resolve/main/ELYZA-japanese-Llama-2-7b-fast-instruct-q4_K_M.gguf

# If the server started in [5] is still running, stop it first, because it also listens on port 8000

(llama) [cent@dlp ~]$ python3 -m llama_cpp.server --model ./ELYZA-japanese-Llama-2-7b-fast-instruct-q4_K_M.gguf --host 0.0.0.0 --port 8000 &

# Ask in Japanese: "Where will the next Winter Olympics be held?"

(llama) [cent@dlp ~]$ curl -s -XPOST -H 'Content-Type: application/json' localhost:8000/v1/chat/completions \
-d '{"messages": [{"role": "user", "content": "次回の冬季オリンピックはどこで開催?"}]}' | jq

llama_print_timings: load time = 2772.83 ms

llama_print_timings: sample time = 123.61 ms / 161 runs ( 0.77 ms per token, 1302.50 tokens per second)

llama_print_timings: prompt eval time = 1825.80 ms / 16 tokens ( 114.11 ms per token, 8.76 tokens per second)

llama_print_timings: eval time = 32487.45 ms / 160 runs ( 203.05 ms per token, 4.92 tokens per second)

llama_print_timings: total time = 35121.34 ms / 176 tokens

INFO: 127.0.0.1:47190 - "POST /v1/chat/completions HTTP/1.1" 200 OK

{

"id": "chatcmpl-a5691170-4608-45d8-91d3-888f5fb63e04",

"object": "chat.completion",

"created": 1708090468,

"model": "./ELYZA-japanese-Llama-2-7b-fast-instruct-q4_K_M.gguf",

"choices": [

{

"index": 0,

"message": {

"content": " 冬季オリンピックの開催場所は、4年ごとに決まっています。\n次回2026年冬季オリンピックの開催都市は、9月13日にイタリアで開かれるIOC総会にて決定されます。\n\n現時点で立候補を表明している国・地域は以下です。\n- 米国 (カリフォルニア州)\n- カナダ (アルバータ州)\n- スイス (ジャングフーラ州)\n- 中国 (北京)\n- 日本 (新潟県)\n- 韓国のいずれかの地域 (仁川広域市・釜山広域市・平昌郡)",

"role": "assistant"

},

"finish_reason": "stop"

}

],

"usage": {

"prompt_tokens": 24,

"completion_tokens": 160,

"total_tokens": 184

}

}
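When sending a Japanese prompt from Python instead of curl, the request body has to be encoded as bytes. The sketch below shows two equivalent ways to serialize it; `encode_chat_body` is a hypothetical helper, and the payload layout follows the curl command above.

```python
import json


def encode_chat_body(prompt: str, ensure_ascii: bool = False) -> bytes:
    """Serialize a single-message chat payload to UTF-8 bytes.

    With ensure_ascii=False the Japanese characters are kept as-is;
    with ensure_ascii=True they become \\uXXXX escapes. Both are valid JSON.
    """
    payload = {"messages": [{"role": "user", "content": prompt}]}
    return json.dumps(payload, ensure_ascii=ensure_ascii).encode("utf-8")


if __name__ == "__main__":
    prompt = "次回の冬季オリンピックはどこで開催?"
    raw = encode_chat_body(prompt)
    escaped = encode_chat_body(prompt, ensure_ascii=True)
    # Both bodies decode to the same JSON object.
    print(json.loads(raw) == json.loads(escaped))
    print(len(raw), len(escaped))
```

Decoding the response with `json.loads` also turns the `\n` sequences in the `content` field into real line breaks, which is what the `sed` filter in [7] does for the `jq` output.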

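# This response is not correct (the 2026 Winter Olympics had already been awarded to Milan and Cortina d'Ampezzo, Italy)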
