llama-cpp-python : Install (GPU)

2024/02/19


Install [llama-cpp-python], the Python binding for [llama.cpp], which is an interface for Meta's Llama (Large Language Model Meta AI) models.


| [1] | |

| [2] | |

| [3] | Install other required packages. |


root@dlp:~# apt -y install nvidia-cudnn python3-pip python3-dev python3-venv gcc g++ cmake jq
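Before building, it can help to confirm that the tools installed above are actually on the PATH. The following is a minimal sketch, not part of the original procedure; the list of command names is an assumption based on the package names in the [apt] command, and the helper name `missing_tools` is illustrative.

```python
import shutil

# Command names assumed to be provided by the packages installed above;
# adjust the list if your distribution names them differently.
REQUIRED_TOOLS = ["gcc", "g++", "cmake", "jq", "python3", "pip3"]


def missing_tools(tools):
    """Return the subset of `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools(REQUIRED_TOOLS)
    if missing:
        print("Missing from PATH:", ", ".join(missing))
    else:
        print("All build tools found.")
```

An empty result only shows that the commands exist; it does not verify their versions or the CUDA toolkit itself.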

| [4] | Log in as a regular user and prepare a Python virtual environment in which to install [llama-cpp-python]. |


ubuntu@dlp:~$ python3 -m venv --system-site-packages ~/llama

ubuntu@dlp:~$ source ~/llama/bin/activate

(llama) ubuntu@dlp:~$
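Besides the [(llama)] prefix in the prompt, the active environment can be checked from Python itself. This is a small sketch under the assumption that the environment was created with the standard [venv] module, as above; the function name `in_virtualenv` is illustrative.

```python
import sys


def in_virtualenv():
    """True when the interpreter runs inside a venv (prefix differs from base)."""
    return sys.prefix != sys.base_prefix


print("virtual environment active:", in_virtualenv())
print("environment root:", sys.prefix)
```

Run with [python3] after activating; the environment root should point at the [~/llama] directory created above.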

| [5] | Install [llama-cpp-python]. |


(llama) ubuntu@dlp:~$ export LLAMA_CUBLAS=1 FORCE_CMAKE=1 CMAKE_ARGS="-DLLAMA_CUBLAS=on"

(llama) ubuntu@dlp:~$ pip3 install llama-cpp-python[server]

Collecting llama-cpp-python[server]

Downloading llama_cpp_python-0.2.44.tar.gz (36.6 MB)

Installing build dependencies ... done

Getting requirements to build wheel ... done

Installing backend dependencies ... done

Preparing metadata (pyproject.toml) ... done

.....

.....

Successfully installed annotated-types-0.6.0 anyio-4.2.0 diskcache-5.6.3 exceptiongroup-1.2.0 fastapi-0.109.2 h11-0.14.0 llama-cpp-python-0.2.44 numpy-1.26.4 pydantic-2.6.1 pydantic-core-2.16.2 pydantic-settings-2.2.0 python-dotenv-1.0.1 sniffio-1.3.0 sse-starlette-2.0.0 starlette-0.36.3 starlette-context-0.3.6 typing-extensions-4.9.0 uvicorn-0.27.1
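To confirm which release ended up in the virtual environment, the package metadata can be queried with the standard library. A minimal sketch; the helper name `installed_version` is illustrative. It only reports the installed version and says nothing about whether the build picked up CUDA. The [LLAMA_CUBLAS] option applies to the 0.2.44 release shown here, and other releases may expect a different CMake option.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of `package`, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


version = installed_version("llama-cpp-python")
print("llama-cpp-python:", version or "not installed")
```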

| [6] | Download a GGUF-format model that [llama.cpp] can load, then start the [llama-cpp-python] server. |

⇒ https://huggingface.co/TheBloke/Llama-2-13B-chat-GGUF/tree/main

⇒ https://huggingface.co/TheBloke/Llama-2-70B-Chat-GGUF/tree/main


(llama) ubuntu@dlp:~$ wget https://huggingface.co/TheBloke/Llama-2-13B-chat-GGUF/resolve/main/llama-2-13b-chat.Q4_K_M.gguf

# [--n_gpu_layers] : number of layers to put on the GPU

# -- specify [-1] to use all if you do not know

(llama) ubuntu@dlp:~$ python3 -m llama_cpp.server --model ./llama-2-13b-chat.Q4_K_M.gguf --n_gpu_layers -1 --host 0.0.0.0 --port 8000 &

(llama) ubuntu@dlp:~$ ggml_init_cublas: GGML_CUDA_FORCE_MMQ: no

ggml_init_cublas: CUDA_USE_TENSOR_CORES: yes

ggml_init_cublas: found 1 CUDA devices:

Device 0: NVIDIA GeForce RTX 3060, compute capability 8.6, VMM: yes

llama_model_loader: loaded meta data with 19 key-value pairs and 363 tensors from ./llama-2-13b-chat.Q4_K_M.gguf (version GGUF V2)

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = llama

llama_model_loader: - kv 1: general.name str = LLaMA v2

llama_model_loader: - kv 2: llama.context_length u32 = 4096

llama_model_loader: - kv 3: llama.embedding_length u32 = 5120

llama_model_loader: - kv 4: llama.block_count u32 = 40

llama_model_loader: - kv 5: llama.feed_forward_length u32 = 13824

llama_model_loader: - kv 6: llama.rope.dimension_count u32 = 128

llama_model_loader: - kv 7: llama.attention.head_count u32 = 40

llama_model_loader: - kv 8: llama.attention.head_count_kv u32 = 40

llama_model_loader: - kv 9: llama.attention.layer_norm_rms_epsilon f32 = 0.000010

llama_model_loader: - kv 10: general.file_type u32 = 15

llama_model_loader: - kv 11: tokenizer.ggml.model str = llama

llama_model_loader: - kv 12: tokenizer.ggml.tokens arr[str,32000] = ["<unk>", "<s>", "</s>", "<0x00>", "<...

llama_model_loader: - kv 13: tokenizer.ggml.scores arr[f32,32000] = [0.000000, 0.000000, 0.000000, 0.0000...

llama_model_loader: - kv 14: tokenizer.ggml.token_type arr[i32,32000] = [2, 3, 3, 6, 6, 6, 6, 6, 6, 6, 6, 6, ...

llama_model_loader: - kv 15: tokenizer.ggml.bos_token_id u32 = 1

llama_model_loader: - kv 16: tokenizer.ggml.eos_token_id u32 = 2

llama_model_loader: - kv 17: tokenizer.ggml.unknown_token_id u32 = 0

llama_model_loader: - kv 18: general.quantization_version u32 = 2

llama_model_loader: - type f32: 81 tensors

llama_model_loader: - type q4_K: 241 tensors

llama_model_loader: - type q6_K: 41 tensors

llm_load_vocab: special tokens definition check successful ( 259/32000 ).

llm_load_print_meta: format = GGUF V2

llm_load_print_meta: arch = llama

llm_load_print_meta: vocab type = SPM

llm_load_print_meta: n_vocab = 32000

llm_load_print_meta: n_merges = 0

llm_load_print_meta: n_ctx_train = 4096

llm_load_print_meta: n_embd = 5120

llm_load_print_meta: n_head = 40

llm_load_print_meta: n_head_kv = 40

llm_load_print_meta: n_layer = 40

llm_load_print_meta: n_rot = 128

llm_load_print_meta: n_embd_head_k = 128

llm_load_print_meta: n_embd_head_v = 128

llm_load_print_meta: n_gqa = 1

llm_load_print_meta: n_embd_k_gqa = 5120

llm_load_print_meta: n_embd_v_gqa = 5120

llm_load_print_meta: f_norm_eps = 0.0e+00

llm_load_print_meta: f_norm_rms_eps = 1.0e-05

llm_load_print_meta: f_clamp_kqv = 0.0e+00

llm_load_print_meta: f_max_alibi_bias = 0.0e+00

llm_load_print_meta: n_ff = 13824

llm_load_print_meta: n_expert = 0

llm_load_print_meta: n_expert_used = 0

llm_load_print_meta: rope scaling = linear

llm_load_print_meta: freq_base_train = 10000.0

llm_load_print_meta: freq_scale_train = 1

llm_load_print_meta: n_yarn_orig_ctx = 4096

llm_load_print_meta: rope_finetuned = unknown

llm_load_print_meta: model type = 13B

llm_load_print_meta: model ftype = Q4_K - Medium

llm_load_print_meta: model params = 13.02 B

llm_load_print_meta: model size = 7.33 GiB (4.83 BPW)

llm_load_print_meta: general.name = LLaMA v2

llm_load_print_meta: BOS token = 1 '<s>'

llm_load_print_meta: EOS token = 2 '</s>'

llm_load_print_meta: UNK token = 0 '<unk>'

llm_load_print_meta: LF token = 13 '<0x0A>'

llm_load_tensors: ggml ctx size = 0.28 MiB

llm_load_tensors: offloading 40 repeating layers to GPU

llm_load_tensors: offloading non-repeating layers to GPU

llm_load_tensors: offloaded 41/41 layers to GPU

llm_load_tensors: CPU buffer size = 87.89 MiB

llm_load_tensors: CUDA0 buffer size = 7412.96 MiB

....................................................................................................

llama_new_context_with_model: n_ctx = 2048

llama_new_context_with_model: freq_base = 10000.0

llama_new_context_with_model: freq_scale = 1

llama_kv_cache_init: CUDA0 KV buffer size = 1600.00 MiB

llama_new_context_with_model: KV self size = 1600.00 MiB, K (f16): 800.00 MiB, V (f16): 800.00 MiB

llama_new_context_with_model: CUDA_Host input buffer size = 15.01 MiB

llama_new_context_with_model: CUDA0 compute buffer size = 204.00 MiB

llama_new_context_with_model: CUDA_Host compute buffer size = 10.00 MiB

llama_new_context_with_model: graph splits (measure): 3

AVX = 1 | AVX_VNNI = 0 | AVX2 = 1 | AVX512 = 0 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 1 | SSE3 = 1 | SSSE3 = 1 | VSX = 0 | MATMUL_INT8 = 0 |

Model metadata: {'tokenizer.ggml.unknown_token_id': '0', 'tokenizer.ggml.eos_token_id': '2', 'general.architecture': 'llama', 'llama.context_length': '4096', 'general.name': 'LLaMA v2', 'llama.embedding_length': '5120', 'llama.feed_forward_length': '13824', 'llama.attention.layer_norm_rms_epsilon': '0.000010', 'llama.rope.dimension_count': '128', 'llama.attention.head_count': '40', 'tokenizer.ggml.bos_token_id': '1', 'llama.block_count': '40', 'llama.attention.head_count_kv': '40', 'general.quantization_version': '2', 'tokenizer.ggml.model': 'llama', 'general.file_type': '15'}

INFO: Started server process [1499]

INFO: Waiting for application startup.

INFO: Application startup complete.

INFO: Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit)
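The buffer sizes in the startup log can be cross-checked with a little arithmetic. The sketch below assumes the usual estimate for an f16 KV cache (one K vector and one V vector per layer for each context position); the function name `kv_cache_mib` is illustrative, and every number is copied from the log above. The sum covers only the GPU buffers the log reports, so actual GPU memory use will be somewhat higher.

```python
MIB = 1024 ** 2

# Values copied from the startup log above.
n_ctx = 2048          # llama_new_context_with_model: n_ctx
n_layer = 40          # llm_load_print_meta: n_layer
n_embd_k_gqa = 5120   # llm_load_print_meta: n_embd_k_gqa
n_embd_v_gqa = 5120   # llm_load_print_meta: n_embd_v_gqa
bytes_per_f16 = 2     # K and V are stored as f16


def kv_cache_mib(n_ctx, n_layer, n_embd_k, n_embd_v, elem_bytes):
    """Return (K, V) cache sizes in MiB for the given model dimensions."""
    k = n_ctx * n_layer * n_embd_k * elem_bytes / MIB
    v = n_ctx * n_layer * n_embd_v * elem_bytes / MIB
    return k, v


k_mib, v_mib = kv_cache_mib(n_ctx, n_layer, n_embd_k_gqa, n_embd_v_gqa, bytes_per_f16)
print(f"K: {k_mib:.2f} MiB, V: {v_mib:.2f} MiB, KV total: {k_mib + v_mib:.2f} MiB")

# GPU-side buffers reported in the log, in MiB.
gpu_buffers_mib = {
    "model weights (CUDA0 buffer)": 7412.96,
    "KV cache (CUDA0 KV buffer)": k_mib + v_mib,
    "compute buffer (CUDA0)": 204.00,
}
total_mib = sum(gpu_buffers_mib.values())
print(f"reported GPU buffers: {total_mib:.2f} MiB")
```

The computed K and V sizes agree with the 800.00 MiB each that the log reports, and the reported buffers add up to roughly 9 GiB, which is consistent with the model fitting on the 12G card used here.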


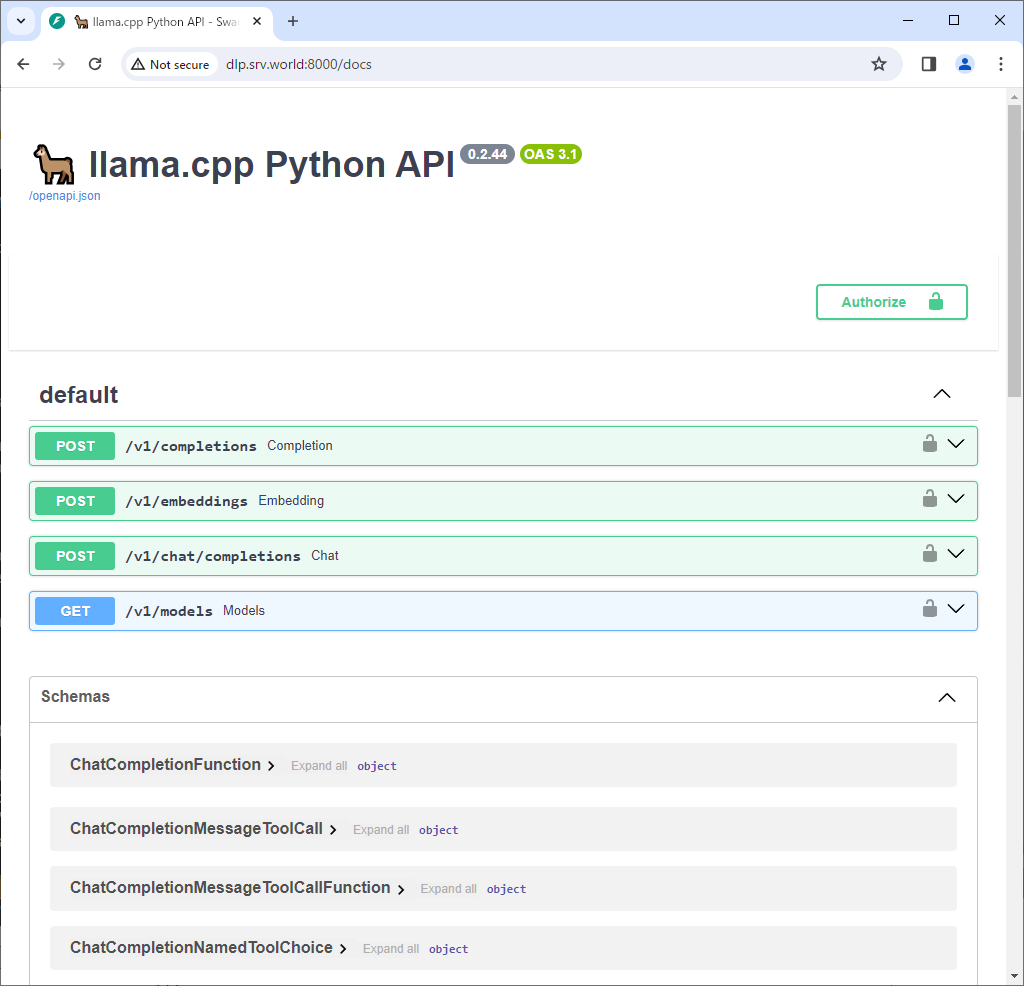

| [7] | You can read the documentation by accessing [http://(server hostname or IP address):8000/docs] from any computer in your local network. |
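The same information can be read programmatically. The install output above shows that the server is built on FastAPI, which by default also publishes a machine-readable schema at [/openapi.json]; the sketch below assumes this server leaves that default enabled. The function names `list_routes` and `fetch_schema` are illustrative.

```python
import json
import urllib.request

# Keys inside an OpenAPI path item that denote HTTP operations.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def list_routes(schema):
    """Return sorted (METHOD, path) pairs from an OpenAPI schema dict."""
    routes = []
    for path, item in schema.get("paths", {}).items():
        for key in item:
            if key.lower() in HTTP_METHODS:
                routes.append((key.upper(), path))
    return sorted(routes)


def fetch_schema(base_url, timeout=5.0):
    """Download and decode the OpenAPI schema from a running server."""
    with urllib.request.urlopen(f"{base_url}/openapi.json", timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Assumes the server from step [6] is reachable on localhost:8000.
    try:
        for method, path in list_routes(fetch_schema("http://localhost:8000")):
            print(method, path)
    except OSError as exc:
        print("server not reachable:", exc)
```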


| [8] | Post some questions as follows and verify that the server responds normally. The response time and content vary depending on the question and the model used. For reference, this example runs on a machine with 8 vCPU + 16G memory + GeForce RTX 3060 (12G). For the same question on the same machine, processing with the GPU is more than 5 times faster than without it. |


(llama) ubuntu@dlp:~$ curl -s -XPOST -H 'Content-Type: application/json' localhost:8000/v1/chat/completions \
-d '{"messages": [{"role": "user", "content": "Who are you?"}]}' | jq

llama_print_timings: load time = 193.01 ms

llama_print_timings: sample time = 47.93 ms / 85 runs ( 0.56 ms per token, 1773.31 tokens per second)

llama_print_timings: prompt eval time = 192.90 ms / 15 tokens ( 12.86 ms per token, 77.76 tokens per second)

llama_print_timings: eval time = 2345.54 ms / 84 runs ( 27.92 ms per token, 35.81 tokens per second)

llama_print_timings: total time = 2809.73 ms / 99 tokens

INFO: 127.0.0.1:36364 - "POST /v1/chat/completions HTTP/1.1" 200 OK

{

"id": "chatcmpl-eb23e34e-f5d9-4125-8cae-cffbd47fc62e",

"object": "chat.completion",

"created": 1708322027,

"model": "./llama-2-13b-chat.Q4_K_M.gguf",

"choices": [

{

"index": 0,

"message": {

"content": " Hello! I'm LLaMA, an AI assistant developed by Meta AI that can understand and respond to human input in a conversational manner. I am here to help answer any questions you may have to the best of my ability. I can provide information on a wide range of topics, from science and history to entertainment and culture. Is there something specific you would like to know or discuss?",

"role": "assistant"

},

"finish_reason": "stop"

}

],

"usage": {

"prompt_tokens": 15,

"completion_tokens": 84,

"total_tokens": 99

}

}
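The same request can be sent from Python with only the standard library. This is a minimal sketch that mirrors the [curl] command above, assuming the server from step [6] is listening on localhost:8000; the helper names `build_chat_request`, `extract_reply` and `ask` are illustrative and not part of [llama-cpp-python].

```python
import json
import urllib.request

# Endpoint used by the curl command above; host and port are those from step [6].
CHAT_URL = "http://localhost:8000/v1/chat/completions"


def build_chat_request(question, url=CHAT_URL):
    """Build a POST request carrying a single user message as JSON."""
    payload = {"messages": [{"role": "user", "content": question}]}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response):
    """Return (assistant text, total token count) from a chat completion dict."""
    message = response["choices"][0]["message"]
    return message["content"].strip(), response["usage"]["total_tokens"]


def ask(question, timeout=120.0):
    """Send the question to the server and return the parsed reply."""
    with urllib.request.urlopen(build_chat_request(question), timeout=timeout) as resp:
        return extract_reply(json.load(resp))


if __name__ == "__main__":
    try:
        reply, tokens = ask("Who are you?")
        print(reply)
        print("total tokens:", tokens)
    except OSError as exc:
        print("server not reachable:", exc)
```

The generous timeout is a deliberate choice: as the timing lines show, longer answers can take tens of seconds to generate.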

(llama) ubuntu@dlp:~$ curl -s -XPOST -H 'Content-Type: application/json' localhost:8000/v1/chat/completions \
-d '{"messages": [{"role": "user", "content": "Tell me about Hiroshima city, Japan."}]}' | jq | sed -e 's/\\n/\n/g'

llama_print_timings: load time = 193.01 ms

llama_print_timings: sample time = 444.17 ms / 826 runs ( 0.54 ms per token, 1859.66 tokens per second)

llama_print_timings: prompt eval time = 227.16 ms / 17 tokens ( 13.36 ms per token, 74.84 tokens per second)

llama_print_timings: eval time = 23981.41 ms / 825 runs ( 29.07 ms per token, 34.40 tokens per second)

llama_print_timings: total time = 27050.95 ms / 842 tokens

INFO: 127.0.0.1:52610 - "POST /v1/chat/completions HTTP/1.1" 200 OK

{

"id": "chatcmpl-1ccabca1-bfdb-483f-8753-41e0b850d010",

"object": "chat.completion",

"created": 1708322099,

"model": "./llama-2-13b-chat.Q4_K_M.gguf",

"choices": [

{

"index": 0,

"message": {

"content": " Sure, I'd be happy to help! Here are some key points about Hiroshima City, Japan:

1. Location: Hiroshima is located in the Chugoku region of western Japan and is the largest city in the region. It is situated on the banks of the Ota River and is known for its beautiful scenery and vibrant cultural scene.

2. History: Hiroshima was a small fortified city during the Edo period (1603-1867) and was known for its castle and cultural events. However, the city rose to prominence during World War II when it became a major center for military production and was selected as one of the targets for atomic bombings by the United States in August 1945. The atomic bombing of Hiroshima on August 6, 1945, killed an estimated 70,000 to 80,000 people instantly, and another 70,000 were injured or died from radiation sickness in the months and years following the attack. Today, Hiroshima is known for its peace memorial park and museum, which commemorate the victims of the atomic bombing and promote peace and nuclear disarmament.

3. Attractions: Some of the top attractions in Hiroshima include the Hiroshima Peace Memorial Park and Museum, which features a memorial to the victims of the atomic bombing and a museum that explores the history and effects of nuclear weapons. Other popular attractions include the Atomic Bomb Dome (which was designated as a UNESCO World Heritage Site in 1996), the Hiroshima Museum of Art, and the Miyajima Island, which is famous for its beautiful scenery and historic temples and shrines.

4. Food: Hiroshima is known for its local cuisine, which includes dishes such as okonomiyaki (a savory pancake made with batter and various ingredients such as vegetables, meat, and seafood), oysters, and mizuna (a type of greens). The city is also famous for its sake (Japanese rice wine) and shochu (a type of spirit made from barley or sweet potato).

5. Climate: Hiroshima has a humid subtropical climate with hot summers and mild winters. The average temperature in August (the hottest month) is around 28°C (82°F), while the average temperature in January (the coldest month) is around 2°C (36°F).

6. Population: As of 2020, the population of Hiroshima City is approximately 1.2 million people. The city has a diverse population, with a mix of old and new neighborhoods and a thriving cultural scene.

7. Transportation: Hiroshima has a well-developed transportation system, with a busy airport, a major train station, and a network of buses and trams that connect the city to other parts of Japan and the rest of Asia. The city is also known for its bicycle-friendly streets and pedestrian-friendly atmosphere.

8. Economy: Hiroshima has a diverse economy, with a mix of industries such as manufacturing, healthcare, and tourism. The city is home to many major companies, including Mazda, Yamato Holdings, and Hiroshima Electric Railway.

Overall, Hiroshima City is a vibrant and historic city that offers a unique blend of traditional Japanese culture and modern attractions. It is a popular destination for tourists and is known for its beautiful scenery, delicious food, and rich cultural heritage.",

"role": "assistant"

},

"finish_reason": "stop"

}

],

"usage": {

"prompt_tokens": 23,

"completion_tokens": 825,

"total_tokens": 848

}

}
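The [llama_print_timings] lines in the outputs above are the easiest way to compare runs, for example with and without the GPU. The sketch below parses such a line and recomputes the generation throughput. The regular expression is an assumption fitted to the lines shown in this page, not an official format, and the names `parse_timing` and `tokens_per_second` are illustrative.

```python
import re

# Fitted to lines such as:
#   llama_print_timings: eval time = 2345.54 ms / 84 runs ( 27.92 ms per token, ...)
TIMING_RE = re.compile(
    r"llama_print_timings:\s+(?P<stage>[a-z ]+?) time\s*=\s*(?P<ms>[\d.]+) ms"
    r"(?:\s*/\s*(?P<count>\d+) (?:runs|tokens))?"
)


def parse_timing(line):
    """Return (stage, milliseconds, count or None), or None if the line does not match."""
    match = TIMING_RE.search(line)
    if match is None:
        return None
    count = int(match.group("count")) if match.group("count") else None
    return match.group("stage"), float(match.group("ms")), count


def tokens_per_second(ms, count):
    """Throughput for `count` tokens processed in `ms` milliseconds."""
    return count / (ms / 1000.0)


# Eval lines copied from the two requests above.
for line in (
    "llama_print_timings: eval time = 2345.54 ms / 84 runs ( 27.92 ms per token, 35.81 tokens per second)",
    "llama_print_timings: eval time = 23981.41 ms / 825 runs ( 29.07 ms per token, 34.40 tokens per second)",
):
    stage, ms, count = parse_timing(line)
    print(f"{stage}: {tokens_per_second(ms, count):.2f} tokens per second")
```

Both requests generate at roughly the same rate, so the much longer total time of the second request comes from the length of the answer rather than from slower processing.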
