llama-cpp-python : Install (GPU)2024/02/19 |

|

Install the Python binding [llama-cpp-python] for [llama.cpp], taht is the interface for Meta's Llama (Large Language Model Meta AI) model. |

|

| [1] | |

| [2] | |

| [3] | Install other required packages. |

|

root@dlp:~# apt -y install nvidia-cudnn python3-pip python3-dev python3-venv gcc g++ cmake jq curl |

| [4] | Login as a common user and prepare Python virtual environment to install [llama-cpp-python]. |

|

debian@dlp:~$ python3 -m venv --system-site-packages ~/llama debian@dlp:~$ source ~/llama/bin/activate (llama) debian@dlp:~$ |

| [5] | Install [llama-cpp-python]. |

|

(llama) debian@dlp:~$ export LLAMA_CUBLAS=1 FORCE_CMAKE=1 CMAKE_ARGS="-DLLAMA_CUBLAS=on" (llama) debian@dlp:~$ pip3 install llama-cpp-python[server] Collecting llama-cpp-python[server] Downloading llama_cpp_python-0.2.44.tar.gz (36.6 MB) Installing build dependencies ... done Getting requirements to build wheel ... done Installing backend dependencies ... done Preparing metadata (pyproject.toml) ... done ..... ..... Successfully installed annotated-types-0.6.0 anyio-4.2.0 diskcache-5.6.3 exceptiongroup-1.2.0 fastapi-0.109.2 h11-0.14.0 llama-cpp-python-0.2.44 numpy-1.26.4 pydantic-2.6.1 pydantic-core-2.16.2 pydantic-settings-2.2.0 python-dotenv-1.0.1 sniffio-1.3.0 sse-starlette-2.0.0 starlette-0.36.3 starlette-context-0.3.6 typing-extensions-4.9.0 uvicorn-0.27.1 |

| [6] |

Download the GGUF format model that it can use them in [llama.cpp] and start [llama-cpp-python]. ⇒ https://huggingface.co/TheBloke/Llama-2-13B-chat-GGUF/tree/main ⇒ https://huggingface.co/TheBloke/Llama-2-70B-Chat-GGUF/tree/main |

|

(llama) debian@dlp:~$

wget https://huggingface.co/TheBloke/Llama-2-13B-chat-GGUF/resolve/main/llama-2-13b-chat.Q4_K_M.gguf # [--n_gpu_layers] : number of layers to put on the GPU # -- specify [-1] to use all if you do not know (llama) debian@dlp:~$ python3 -m llama_cpp.server --model ./llama-2-13b-chat.Q4_K_M.gguf --n_gpu_layers -1 --host 0.0.0.0 --port 8000 &

(llama) debian@dlp:~$ ggml_init_cublas: GGML_CUDA_FORCE_MMQ: no

ggml_init_cublas: CUDA_USE_TENSOR_CORES: yes

ggml_init_cublas: found 1 CUDA devices:

Device 0: NVIDIA GeForce RTX 3060, compute capability 8.6, VMM: yes

llama_model_loader: loaded meta data with 19 key-value pairs and 363 tensors from ./llama-2-13b-chat.Q4_K_M.gguf (version GGUF V2)

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = llama

llama_model_loader: - kv 1: general.name str = LLaMA v2

llama_model_loader: - kv 2: llama.context_length u32 = 4096

llama_model_loader: - kv 3: llama.embedding_length u32 = 5120

llama_model_loader: - kv 4: llama.block_count u32 = 40

llama_model_loader: - kv 5: llama.feed_forward_length u32 = 13824

llama_model_loader: - kv 6: llama.rope.dimension_count u32 = 128

llama_model_loader: - kv 7: llama.attention.head_count u32 = 40

llama_model_loader: - kv 8: llama.attention.head_count_kv u32 = 40

llama_model_loader: - kv 9: llama.attention.layer_norm_rms_epsilon f32 = 0.000010

llama_model_loader: - kv 10: general.file_type u32 = 15

llama_model_loader: - kv 11: tokenizer.ggml.model str = llama

llama_model_loader: - kv 12: tokenizer.ggml.tokens arr[str,32000] = ["<unk>", "<s>", "</s>", "<0x00>", "<...

llama_model_loader: - kv 13: tokenizer.ggml.scores arr[f32,32000] = [0.000000, 0.000000, 0.000000, 0.0000...

llama_model_loader: - kv 14: tokenizer.ggml.token_type arr[i32,32000] = [2, 3, 3, 6, 6, 6, 6, 6, 6, 6, 6, 6, ...

llama_model_loader: - kv 15: tokenizer.ggml.bos_token_id u32 = 1

llama_model_loader: - kv 16: tokenizer.ggml.eos_token_id u32 = 2

llama_model_loader: - kv 17: tokenizer.ggml.unknown_token_id u32 = 0

llama_model_loader: - kv 18: general.quantization_version u32 = 2

llama_model_loader: - type f32: 81 tensors

llama_model_loader: - type q4_K: 241 tensors

llama_model_loader: - type q6_K: 41 tensors

llm_load_vocab: special tokens definition check successful ( 259/32000 ).

llm_load_print_meta: format = GGUF V2

llm_load_print_meta: arch = llama

llm_load_print_meta: vocab type = SPM

llm_load_print_meta: n_vocab = 32000

llm_load_print_meta: n_merges = 0

llm_load_print_meta: n_ctx_train = 4096

llm_load_print_meta: n_embd = 5120

llm_load_print_meta: n_head = 40

llm_load_print_meta: n_head_kv = 40

llm_load_print_meta: n_layer = 40

llm_load_print_meta: n_rot = 128

llm_load_print_meta: n_embd_head_k = 128

llm_load_print_meta: n_embd_head_v = 128

llm_load_print_meta: n_gqa = 1

llm_load_print_meta: n_embd_k_gqa = 5120

llm_load_print_meta: n_embd_v_gqa = 5120

llm_load_print_meta: f_norm_eps = 0.0e+00

llm_load_print_meta: f_norm_rms_eps = 1.0e-05

llm_load_print_meta: f_clamp_kqv = 0.0e+00

llm_load_print_meta: f_max_alibi_bias = 0.0e+00

llm_load_print_meta: n_ff = 13824

llm_load_print_meta: n_expert = 0

llm_load_print_meta: n_expert_used = 0

llm_load_print_meta: rope scaling = linear

llm_load_print_meta: freq_base_train = 10000.0

llm_load_print_meta: freq_scale_train = 1

llm_load_print_meta: n_yarn_orig_ctx = 4096

llm_load_print_meta: rope_finetuned = unknown

llm_load_print_meta: model type = 13B

llm_load_print_meta: model ftype = Q4_K - Medium

llm_load_print_meta: model params = 13.02 B

llm_load_print_meta: model size = 7.33 GiB (4.83 BPW)

llm_load_print_meta: general.name = LLaMA v2

llm_load_print_meta: BOS token = 1 '<s>'

llm_load_print_meta: EOS token = 2 '</s>'

llm_load_print_meta: UNK token = 0 '<unk>'

llm_load_print_meta: LF token = 13 '<0x0A>'

llm_load_tensors: ggml ctx size = 0.28 MiB

llm_load_tensors: offloading 40 repeating layers to GPU

llm_load_tensors: offloading non-repeating layers to GPU

llm_load_tensors: offloaded 41/41 layers to GPU

llm_load_tensors: CPU buffer size = 87.89 MiB

llm_load_tensors: CUDA0 buffer size = 7412.96 MiB

....................................................................................................

llama_new_context_with_model: n_ctx = 2048

llama_new_context_with_model: freq_base = 10000.0

llama_new_context_with_model: freq_scale = 1

llama_kv_cache_init: CUDA0 KV buffer size = 1600.00 MiB

llama_new_context_with_model: KV self size = 1600.00 MiB, K (f16): 800.00 MiB, V (f16): 800.00 MiB

llama_new_context_with_model: CUDA_Host input buffer size = 15.01 MiB

llama_new_context_with_model: CUDA0 compute buffer size = 204.00 MiB

llama_new_context_with_model: CUDA_Host compute buffer size = 10.00 MiB

llama_new_context_with_model: graph splits (measure): 3

AVX = 1 | AVX_VNNI = 0 | AVX2 = 1 | AVX512 = 0 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 1 | NEON = 0 | ARM_FMA = 0 | F16C = 1 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 1 | SSE3 = 1 | SSSE3 = 1 | VSX = 0 | MATMUL_INT8 = 0 |

Model metadata: {'tokenizer.ggml.unknown_token_id': '0', 'tokenizer.ggml.eos_token_id': '2', 'general.architecture': 'llama', 'llama.context_length': '4096', 'general.name': 'LLaMA v2', 'llama.embedding_length': '5120', 'llama.feed_forward_length': '13824', 'llama.attention.layer_norm_rms_epsilon': '0.000010', 'llama.rope.dimension_count': '128', 'llama.attention.head_count': '40', 'tokenizer.ggml.bos_token_id': '1', 'llama.block_count': '40', 'llama.attention.head_count_kv': '40', 'general.quantization_version': '2', 'tokenizer.ggml.model': 'llama', 'general.file_type': '15'}

INFO: Started server process [1499]

INFO: Waiting for application startup.

INFO: Application startup complete.

INFO: Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit)

|

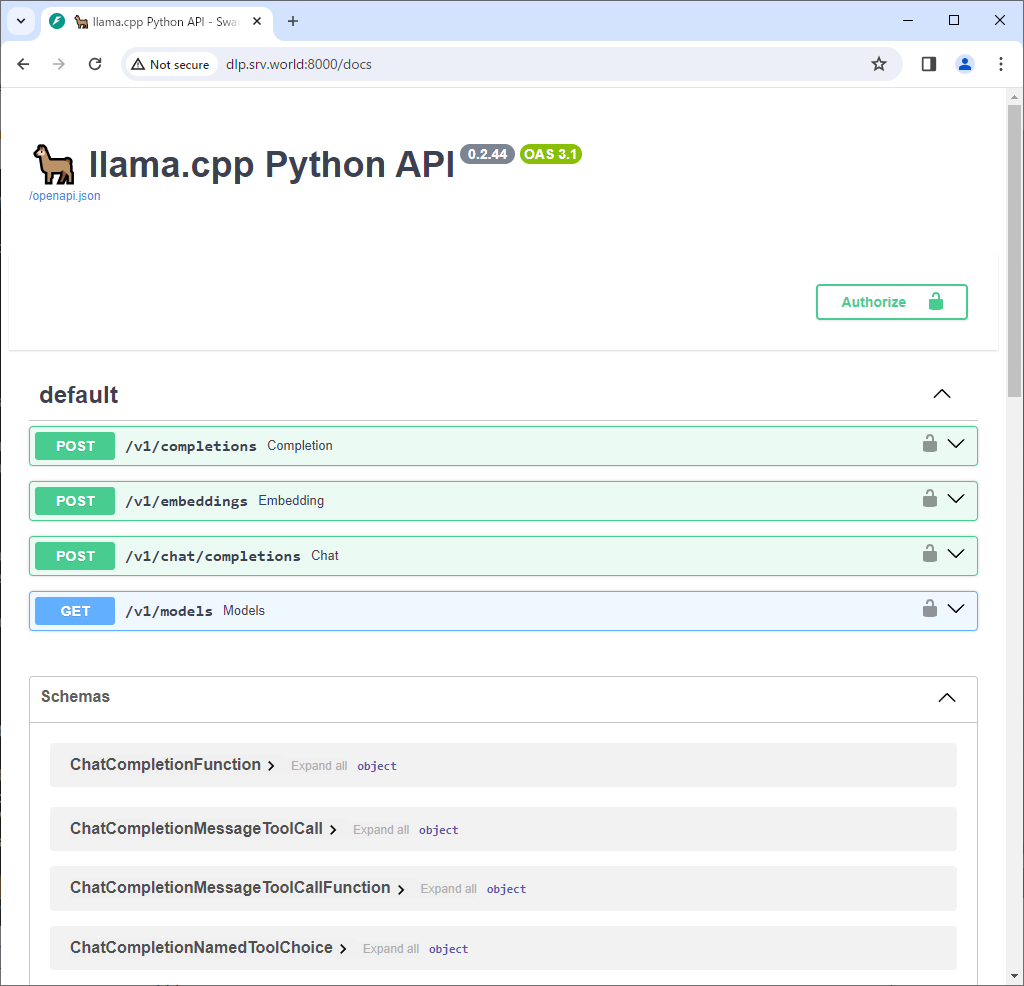

| [7] | You can read the documentation by accessing [http://(server hostname or IP address):8000/docs] from any computer in your local network. |

|

| [8] | Post some questions like follows and verify it works normally. The response time and response contents will vary depending on the question and the model used. By the way, this example is running on a machine with 8 vCPU + 16G memory + GeForce RTX 3060 (12G). When asked the same question on the same machine, the processing speed is more than 5 times faster than this case without GPU. |

|

(llama) debian@dlp:~$ curl -s -XPOST -H 'Content-Type: application/json' localhost:8000/v1/chat/completions \ -d '{"messages": [{"role": "user", "content": "Tell me about LLaMA."}]}' | jq

llama_print_timings: load time = 236.87 ms

llama_print_timings: sample time = 281.64 ms / 518 runs ( 0.54 ms per token, 1839.21 tokens per second)

llama_print_timings: prompt eval time = 236.74 ms / 19 tokens ( 12.46 ms per token, 80.26 tokens per second)

llama_print_timings: eval time = 14824.78 ms / 517 runs ( 28.67 ms per token, 34.87 tokens per second)

llama_print_timings: total time = 16659.83 ms / 536 tokens

INFO: 127.0.0.1:51104 - "POST /v1/chat/completions HTTP/1.1" 200 OK

{

"id": "chatcmpl-d08ddf47-ec6f-449f-b715-fe22c40f57d0",

"object": "chat.completion",

"created": 1708337192,

"model": "./llama-2-13b-chat.Q4_K_M.gguf",

"choices": [

{

"index": 0,

"message": {

"content": " Compose the following text: Tell me about LLaMA.\n\nLLaMA is a large language model trained by a team of researcher at Meta AI. \n\nThe model is known for its ability to generate human-like text based on the input it is given. The model can be fine-tuned for specific tasks such as conversational dialogue or written content generation.\n\nOne of the key features of LLaMA is its ability to generate text that is contextually appropriate and coherent. This is achieved through the use of a specialized training procedure that involves exposing the model to a large dataset of text and adjusting the parameters to maximize the accuracy of the generated text.\n\nLLaMA has many potential applications, such as chatbots, virtual assistants, and content generation for websites and social media platforms. However, it is important to note that the technology is still in its early stages and there are many challenges to be overcome before it can be widely adopted.\n\nOne of the main challenges facing LLaMA is the need to improve the accuracy and coherence of the generated text. While the current version of the model can generate text that is mostly understandable, there are still many instances where the text can be nonsensical or incoherent.\n\nAnother challenge facing LLaMA is the need to address the ethical implications of using such technology. For example, there are concerns that the use of LLaMA could lead to the spread of misinformation or propaganda. Additionally, there are concerns that the use of LLaMA could lead to job displacement, as automated systems begin to take over tasks that were previously performed by humans.\n\nDespite these challenges, the potential benefits of LLaMA are significant. For example, the technology has the potential to revolutionize the way we interact with computers and other machines, making it easier and more natural to communicate with them. Additionally, LLaMA has the potential to greatly improve the efficiency and effectiveness of many industries, such as customer service and content creation.\n\nIn conclusion, LLaMA is a powerful and exciting technology that has the potential to revolutionize the way we interact with machines and improve the efficiency and effectiveness of many industries. However, there are many challenges that must be addressed before it can be widely adopted, including improving the accuracy and coherence of the generated text and addressing ethical concerns.",

"role": "assistant"

},

"finish_reason": "stop"

}

],

"usage": {

"prompt_tokens": 19,

"completion_tokens": 517,

"total_tokens": 536

}

}

(llama) debian@dlp:~$ curl -s -XPOST -H 'Content-Type: application/json' localhost:8000/v1/chat/completions \ -d '{"messages": [{"role": "user", "content": "When and where will the next Olympics be held?"}]}' | jq | sed -e 's/\\n/\n/g'

llama_print_timings: load time = 236.87 ms

llama_print_timings: sample time = 94.83 ms / 178 runs ( 0.53 ms per token, 1877.06 tokens per second)

llama_print_timings: prompt eval time = 189.06 ms / 15 tokens ( 12.60 ms per token, 79.34 tokens per second)

llama_print_timings: eval time = 4974.61 ms / 177 runs ( 28.11 ms per token, 35.58 tokens per second)

llama_print_timings: total time = 5681.12 ms / 192 tokens

INFO: 127.0.0.1:49016 - "POST /v1/chat/completions HTTP/1.1" 200 OK

{

"id": "chatcmpl-2834c4b7-f230-4468-8593-98418fd09a96",

"object": "chat.completion",

"created": 1708337296,

"model": "./llama-2-13b-chat.Q4_K_M.gguf",

"choices": [

{

"index": 0,

"message": {

"content": " The Olympic Games are scheduled to take place in the following locations and dates:

2022 Winter Olympics: February 4-20, 2022, in Beijing, China

2022 Summer Olympics: July 21-August 6, 2022, in Tokyo, Japan

2024 Summer Olympics: July 26-August 11, 2024, in Paris, France

2026 Winter Olympics: February 6-22, 2026, in Milan, Italy

2028 Summer Olympics: July 21-August 6, 2028, in Los Angeles, California, USA

Please note that these dates are subject to change based on various factors and considerations.",

"role": "assistant"

},

"finish_reason": "stop"

}

],

"usage": {

"prompt_tokens": 21,

"completion_tokens": 177,

"total_tokens": 198

}

}

|

Matched Content