OKD 4 : Add Compute Nodes

2022/04/19

OKD 4 is the upstream version of Red Hat OpenShift 4.

Add Compute Nodes to an existing OKD 4 cluster.

In this example, a node [node-0 (10.0.0.60)] is added to the existing cluster as follows.

    --------------+----------------+-----------------+--------------
                  |10.0.0.25       |                 |10.0.0.24
    +-------------+-------------+  |  +--------------+-------------+
    |   [mgr.okd4.srv.world]    |  |  | [bootstrap.okd4.srv.world] |
    |       Manager Node        |  |  |       Bootstrap Node       |
    |            DNS            |  |  |                            |
    |           Nginx           |  |  |                            |
    +---------------------------+  |  +----------------------------+
                                   |
    --------------+----------------+-----------------+--------------
                  |10.0.0.40       |                 |10.0.0.41
    +-------------+-------------+  |  +--------------+-------------+
    | [master-0.okd4.srv.world] |  |  | [master-1.okd4.srv.world]  |
    |      Control Plane#1      |  |  |      Control Plane#2       |
    |                           |  |  |                            |
    |                           |  |  |                            |
    +---------------------------+  |  +----------------------------+
                                   |
    --------------+----------------+-----------------+--------------
                  |10.0.0.42                         |10.0.0.60
    +-------------+-------------+     +--------------+-------------+
    | [master-2.okd4.srv.world] |     |  [node-0.okd4.srv.world]   |
    |      Control Plane#3      |     |       Compute Node#1       |
    |                           |     |                            |
    |                           |     |                            |
    +---------------------------+     +----------------------------+

The minimum system requirements are as follows (per the official documentation).

* Bootstrap Node ⇒ 4 CPU, 16 GB RAM, 100 GB Storage, Fedora CoreOS
* Control Plane Node ⇒ 4 CPU, 16 GB RAM, 100 GB Storage, Fedora CoreOS
* Compute Node ⇒ 2 CPU, 8 GB RAM, 100 GB Storage, Fedora CoreOS

Start the Bootstrap Node before beginning the configuration.

| [1] | On the Manager Node, add settings for the new Compute Node. |

    [root@mgr ~]# vi /etc/hosts
    # add hostname (any name you like) and IP address for the new Node
    10.0.0.24   bootstrap
    10.0.0.25   api api-int mgr
    10.0.0.40   master-0 etcd-0 _etcd-server-ssl._tcp
    10.0.0.41   master-1 etcd-1 _etcd-server-ssl._tcp
    10.0.0.42   master-2 etcd-2 _etcd-server-ssl._tcp
    10.0.0.60   node-0
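Because these entries are served to the whole cluster after dnsmasq is restarted, a copied line that reuses a hostname can quietly point a name at the wrong address. The following is a minimal sketch of a sanity check, not part of the original procedure. The function name `dup_hosts` is hypothetical; it reads hosts-format text from standard input and only compares the first hostname on each line, since aliases such as `_etcd-server-ssl._tcp` are repeated on purpose.

```shell
#!/bin/sh
# Hypothetical helper: read hosts-format text on stdin and print every
# canonical hostname (the first name after the IP address) that is used
# on more than one line. Comment lines and blank lines are skipped.
dup_hosts() {
    awk '
        /^[ \t]*#/ { next }        # skip comment lines
        NF >= 2 { seen[$2]++ }     # count the first hostname of each entry
        END { for (name in seen) if (seen[name] > 1) print name }
    ' | sort
}

# Example: dup_hosts < /etc/hosts
```

No output means every address has its own first hostname; any printed name is worth checking before restarting dnsmasq.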

    [root@mgr ~]# vi /etc/nginx/nginx.conf
    # add settings for new node
    stream {
        upstream k8s-api {
            server 10.0.0.24:6443;
            server 10.0.0.40:6443;
            server 10.0.0.41:6443;
            server 10.0.0.42:6443;
        }
        upstream machine-config {
            server 10.0.0.24:22623;
            server 10.0.0.40:22623;
            server 10.0.0.41:22623;
            server 10.0.0.42:22623;
        }
        upstream ingress-http {
            server 10.0.0.40:80;
            server 10.0.0.41:80;
            server 10.0.0.42:80;
            server 10.0.0.60:80;
        }
        upstream ingress-https {
            server 10.0.0.40:443;
            server 10.0.0.41:443;
            server 10.0.0.42:443;
            server 10.0.0.60:443;
        }
        upstream ingress-health {
            server 10.0.0.40:1936;
            server 10.0.0.41:1936;
            server 10.0.0.42:1936;
            server 10.0.0.60:1936;
        }
        server {
            listen 6443;
            proxy_pass k8s-api;
        }
        server {
            listen 22623;
            proxy_pass machine-config;
        }
        server {
            listen 80;
            proxy_pass ingress-http;
        }
        server {
            listen 443;
            proxy_pass ingress-https;
        }
        server {
            listen 1936;
            proxy_pass ingress-health;
        }
    }

    [root@mgr ~]# systemctl restart dnsmasq nginx
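In the configuration above, the new node appears only in the three ingress upstreams (ports 80, 443 and 1936); the API and machine-config upstreams are unchanged. As a rough sketch of how those three blocks could be produced from one node list, so that a later node is added in a single place, something like the following may help. The function names `upstream_block` and `ingress_upstreams` and the variable `ingress_nodes` are hypothetical; the script only prints text and does not modify nginx.conf.

```shell
#!/bin/sh
# Hypothetical helper: print one nginx "upstream" block for the stream module.
# usage: upstream_block NAME PORT IP [IP ...]
upstream_block() {
    name=$1
    port=$2
    shift 2
    printf '    upstream %s {\n' "$name"
    for ip in "$@"; do
        printf '        server %s:%s;\n' "$ip" "$port"
    done
    printf '    }\n'
}

# Addresses listed in the ingress upstreams of this example:
# the three Control Plane nodes plus the new Compute Node.
ingress_nodes="10.0.0.40 10.0.0.41 10.0.0.42 10.0.0.60"

# Print the three ingress upstream blocks for the node list above.
ingress_upstreams() {
    # $ingress_nodes is left unquoted on purpose so it splits into one argument per IP
    upstream_block ingress-http   80   $ingress_nodes
    upstream_block ingress-https  443  $ingress_nodes
    upstream_block ingress-health 1936 $ingress_nodes
}

# Example: ingress_upstreams
```

The printed blocks can be pasted into the `stream` section by hand. Running `nginx -t` before the restart checks the edited file for syntax errors.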

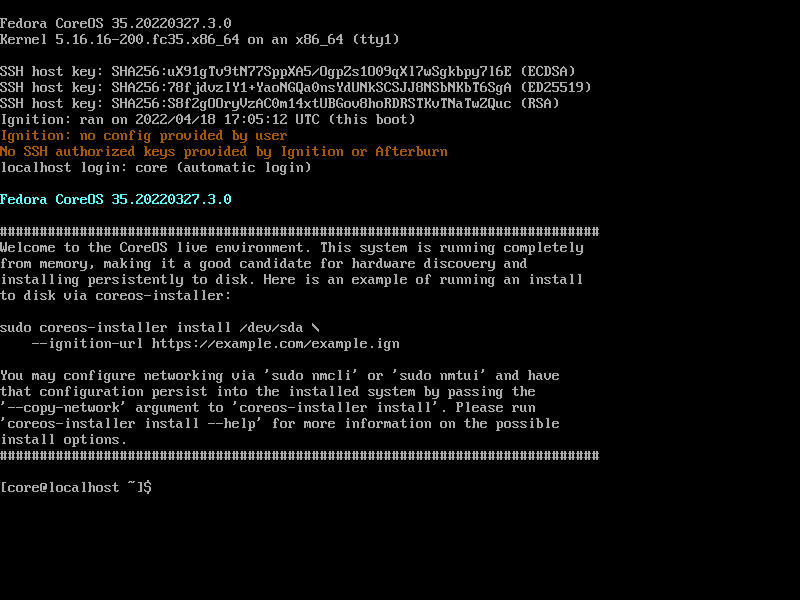

| [2] | Configure the Compute Node. As with the other nodes, insert the Fedora CoreOS DVD and power on the computer. |

| [3] | After the computer boots, configure it in the same way as the other nodes. (The only differences are the IP address and the ignition file name.) |

    [core@localhost ~]# nmcli device
    DEVICE  TYPE      STATE      CONNECTION
    ens160  ethernet  connected  Wired connection 1
    lo      loopback  unmanaged  --

    [core@localhost ~]# nmcli connection add type ethernet autoconnect yes con-name ens160 ifname ens160
    [core@localhost ~]# nmcli connection modify ens160 ipv4.addresses 10.0.0.60/24 ipv4.method manual
    [core@localhost ~]# nmcli connection modify ens160 ipv4.dns 10.0.0.25
    [core@localhost ~]# nmcli connection modify ens160 ipv4.gateway 10.0.0.1
    [core@localhost ~]# nmcli connection modify ens160 ipv4.dns-search okd4.srv.world
    [core@localhost ~]# nmcli connection up ens160

    [core@localhost ~]# sudo fdisk -l
    Disk /dev/nvme0n1: 100 GiB, 107374182400 bytes, 209715200 sectors
    Disk model: VMware Virtual NVMe Disk
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    .....
    .....

    # on Compute Node, specify [worker.ign]
    [core@localhost ~]# sudo coreos-installer install /dev/nvme0n1 --ignition-url=http://10.0.0.25:8080/worker.ign --insecure-ignition --copy-network
    Installing Fedora CoreOS 35.20220327.3.0 x86_64 (512-byte sectors)
    Read disk 2.5 GiB/2.5 GiB (100%)
    Writing Ignition config
    Copying networking configuration from /etc/NetworkManager/system-connections/
    Copying /etc/NetworkManager/system-connections/ens160.nmconnection to installed system
    Install complete.

    # after completing installation, eject DVD and restart computer, and proceed to next step
    # * installation process continues automatically after restarting computer
    [core@localhost ~]# sudo reboot
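Since the ignition file name is one of the two things that differ per node, it can be worth confirming that the URL is correct and reachable before running the installer. The sketch below is an illustration under assumptions, not part of the original steps. The function names `ignition_url` and `ignition_reachable` are hypothetical, the default server `10.0.0.25:8080` is taken from the install command above, and the `bootstrap.ign` and `master.ign` names assume the files were generated by `openshift-install` with its default names.

```shell
#!/bin/sh
# Hypothetical helper: build the Ignition URL for a node role.
# Compute Nodes use worker.ign; the other names are the openshift-install defaults.
# usage: ignition_url ROLE [HOST:PORT]
ignition_url() {
    role=$1
    server=${2:-10.0.0.25:8080}   # web server on the Manager Node in this example
    case "$role" in
        bootstrap) file=bootstrap.ign ;;
        master)    file=master.ign ;;
        worker)    file=worker.ign ;;
        *) echo "unknown role: $role" >&2; return 1 ;;
    esac
    printf 'http://%s/%s\n' "$server" "$file"
}

# Hypothetical pre-flight check: succeed only if the URL can be downloaded.
# curl -f makes HTTP errors (for example 404) count as failures.
ignition_reachable() {
    curl -fsS -o /dev/null "$1"
}

# Example, run on the new node before coreos-installer:
#   url=$(ignition_url worker) && ignition_reachable "$url" && echo "OK: $url"
```

If the check fails, the network settings made with `nmcli` or the web server on the Manager Node are the first things to look at.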

| [4] | A few minutes later, move to the Manager Node and approve the CSRs (Certificate Signing Requests) from the Compute Node that are in [Pending] status. The Compute Node is then added to the cluster. |

    [root@mgr ~]# oc get csr
    NAME                                             AGE     SIGNERNAME                                    REQUESTOR                                                                         REQUESTEDDURATION   CONDITION
    csr-2ds8c                                        68m     kubernetes.io/kubelet-serving                 system:node:master-2.okd4.srv.world                                               <none>              Approved,Issued
    csr-7pwq5                                        80m     kubernetes.io/kube-apiserver-client-kubelet   system:serviceaccount:openshift-machine-config-operator:node-bootstrapper         <none>              Approved,Issued
    csr-8k4g7                                        80m     kubernetes.io/kubelet-serving                 system:node:master-0.okd4.srv.world                                               <none>              Approved,Issued
    csr-gjkns                                        72m     kubernetes.io/kubelet-serving                 system:node:master-1.okd4.srv.world                                               <none>              Approved,Issued
    csr-hx5dq                                        73m     kubernetes.io/kube-apiserver-client-kubelet   system:serviceaccount:openshift-machine-config-operator:node-bootstrapper         <none>              Approved,Issued
    csr-mgltg                                        73m     kubernetes.io/kube-apiserver-client-kubelet   system:serviceaccount:openshift-machine-config-operator:node-bootstrapper         <none>              Approved,Issued
    csr-nhnw4                                        68m     kubernetes.io/kube-apiserver-client-kubelet   system:serviceaccount:openshift-machine-config-operator:node-bootstrapper         <none>              Approved,Issued
    csr-q9pnq                                        2m35s   kubernetes.io/kube-apiserver-client-kubelet   system:serviceaccount:openshift-machine-config-operator:node-bootstrapper         <none>              Pending
    csr-r6ltf                                        2m51s   kubernetes.io/kube-apiserver-client-kubelet   system:serviceaccount:openshift-machine-config-operator:node-bootstrapper         <none>              Pending
    csr-rn4dn                                        80m     kubernetes.io/kube-apiserver-client-kubelet   system:serviceaccount:openshift-machine-config-operator:node-bootstrapper         <none>              Approved,Issued
    csr-ww2gb                                        68m     kubernetes.io/kube-apiserver-client-kubelet   system:serviceaccount:openshift-machine-config-operator:node-bootstrapper         <none>              Approved,Issued
    system:openshift:openshift-authenticator-4zthw   77m     kubernetes.io/kube-apiserver-client           system:serviceaccount:openshift-authentication-operator:authentication-operator   <none>              Approved,Issued
    system:openshift:openshift-monitoring-fs7ws      76m     kubernetes.io/kube-apiserver-client           system:serviceaccount:openshift-monitoring:cluster-monitoring-operator            <none>              Approved,Issued

    [root@mgr ~]# oc adm certificate approve csr-q9pnq
    certificatesigningrequest.certificates.k8s.io/csr-q9pnq approved
    [root@mgr ~]# oc adm certificate approve csr-r6ltf
    certificatesigningrequest.certificates.k8s.io/csr-r6ltf approved

    # Compute node is added
    [root@mgr ~]# oc get nodes
    NAME                      STATUS     ROLES           AGE   VERSION
    master-0.okd4.srv.world   Ready      master,worker   82m   v1.23.3+759c22b
    master-1.okd4.srv.world   Ready      master,worker   74m   v1.23.3+759c22b
    master-2.okd4.srv.world   Ready      master,worker   70m   v1.23.3+759c22b
    node-0.okd4.srv.world     NotReady   worker          15s   v1.23.3+759c22b

    # few minutes later, STATUS turns to Ready
    [root@mgr ~]# oc get nodes
    NAME                      STATUS     ROLES           AGE   VERSION
    master-0.okd4.srv.world   Ready      master,worker   83m   v1.23.3+759c22b
    master-1.okd4.srv.world   Ready      master,worker   75m   v1.23.3+759c22b
    master-2.okd4.srv.world   Ready      master,worker   71m   v1.23.3+759c22b
    node-0.okd4.srv.world     Ready      worker          86s   v1.23.3+759c22b
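When several requests are pending, picking the names out of the list by eye gets tedious. The following is a minimal sketch of one way to do it, assuming the column layout of `oc get csr` shown above, where CONDITION is the last column. The function names `pending_csrs` and `approve_pending_csrs` are hypothetical. The wrapper approves whatever is pending, so the REQUESTOR column should be reviewed first.

```shell
#!/bin/sh
# Hypothetical helper: read `oc get csr` output on stdin and print the names
# of requests whose last column (CONDITION) is exactly "Pending".
# The header line is skipped naturally because its last field is "CONDITION".
pending_csrs() {
    awk '$NF == "Pending" { print $1 }'
}

# Hypothetical wrapper: approve every pending request, one at a time.
# This does not check who made the request; review the list before running it.
approve_pending_csrs() {
    oc get csr | pending_csrs | while read -r name; do
        oc adm certificate approve "$name"
    done
}

# Example:
#   oc get csr | pending_csrs     # list only the pending names
#   approve_pending_csrs          # approve them
```

A node may submit further requests after its first one is approved, so it can be worth listing the pending names again a minute or two later and then confirming the result with `oc get nodes`.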

| [5] | After adding the Compute Node, the Bootstrap Node can be shut down. To access the new Compute Node, connect over SSH as the [core] user, as with the other nodes. |

    [root@mgr ~]# ssh core@node-0 hostname
    node-0.okd4.srv.world
